“Siri, play some music”, “Erica, please check my account balance”, “Alexa, play cartoons in the kids’ room” - nowadays one phrase is enough to have everything done by devices. But what’s the starting point of “nowadays” and how can the devices understand us? Andrey, a Senior Developer of Noveo, tells about voice communication and how we found ourselves doing this today.

How Machines Understand Speech

Part 1

The idea of voice control of devices didn’t pop up in the heydays of scientific and technical progress - our predecessors were driven by the desire to have such an option back in the days, one can witness it in fairy-tales of yesterday: “Open sesame!”, “Stop, little pot!”, “Mirror, mirror on the wall…” - all these phrases that are familiar since childhood not only demonstrate the idea of voice control, but also the concept of interaction protocol with devices long before first galvanic elements existence, not to mention electronics.

Among the first devices provided with implementation of sound control in real life were acoustic switchers (acoustic relays), one of its well-known are the lightning systems controlled by particular sounds, e.g. clapping, as a rule. Systems of this type are certainly not controlled by voice commands, however, they use the same physical principles and the microphone is their essential part, the difference is only in sound signal processing.

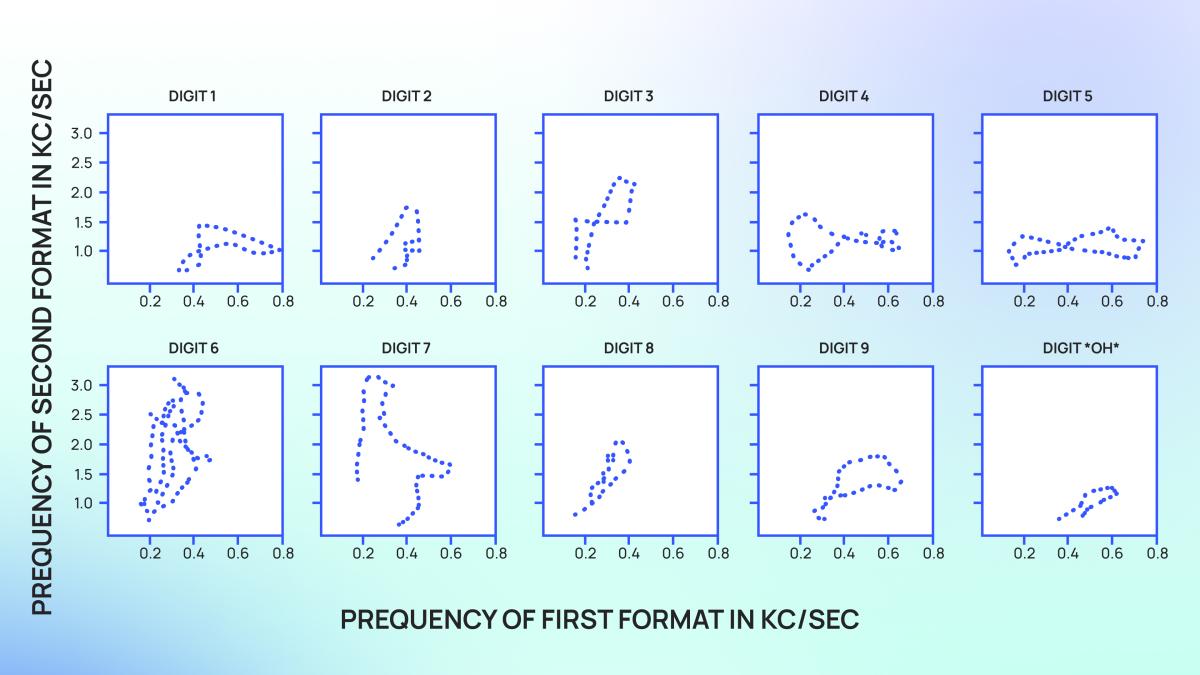

Considering speech recognition, the experiments have been conducted at least since the middle of the 20th century. In 1952 Bell Telephone Laboratories (Bell Labs), American Telephone and Telegraph Company (AT&T) scientific department presented Audrey - a machine that could “understand” human speech. Audrey was able to recognize digits from 0 to 9. Speech recognition has become possible due to successful completion of the sub-task - speech synthesis, gained by Bell Labs in the second half of the 20th century. Back in the days speech synthesis, as well as its recognition, were based on representing sounds as formants, a set of resonant frequencies created by the vocal cords, tongue and lips during speech. Recognition was performed by comparing speaker's formants to the pre-recorded samples. Unsurprisingly, the system was more accurate when the speaker's voice matched the recorded samples.

Advances in 1980s

In 1986 International Business Machines (IBM) presented Tangora - a typewriter with voice control. Its working mechanism was based on the use of Hidden Markov model: the machine calculated the probabilities that the sound processed can be the part of the words it is familiar with. Speech recognition method change led to significant increase of the machine vocabulary, up to 20 thousand words. Tangora operated on an IBM PC/AT computer and required a 20-minute training session to adapt to a new user.

In 1987 Worlds of Wonder company released Julie dolls. The dolls understood 16 words and could run a dialogue via integrated speech synthesizer. They also had light and temperature sensors, whose readings were used to generate responses, making the conversations more meaningful. Like Tangora, Julie dolls required training to recognize a specific person's voice.

It’s worth noticing significant difference between these two devices and the tasks they deal with. The Tangora typewriter was a stationary device with relatively high performance and cost, offering acceptable quality in recognizing a large vocabulary. On the other hand, Julie dolls were portable devices with significantly lower performance and cost, designed to recognize a small set of words. Unlike Tangora, Julie did not need to convert speech into text to function.

While no technical documentation exists for Julie, it’s reasonable to assume its principles of operation were similar to later systems designed to recognize a small amount of voice commands.

Fundamental Principles of Speech Recognition

The speech recognition systems fundamental principles rely on the physical nature of sound and have remained largely unchanged since the mid-20th century. In modern research, a sound signal is defined as an integer vector representing sound pressure values measured at equal time intervals. Recognition involves dividing the signal into equal-length segments, transforming the data from the time domain into the frequency domain, and comparing it with reference signals.

The necessity of dividing the signal into segments and transitioning to the frequency domain depends on the specific task. For instance, when comparing an input signal to a small set of predefined commands, segmentation may not always be required. Carefully selecting a correlation function and a minimal similarity threshold can ensure sufficient accuracy in matching sound streams to command templates with minimal device performance requirements. Unfortunately, this task doesn’t always get the solution of proper quality. For instance, the voice control feature of the Siemens C55 mobile phone used a simplified method of signal comparison, sometimes failing to distinguish words like "accept" and "except" depending on the speech pattern.

Despite its seeming simplicity, voice control systems that apply the recognition principle mentioned above are widely used nowadays. Furthermore, there’s a number of solutions called modules that allow developers of electronic devices to implement voice control without additional work. Among the examples one can mention Voice Recognition Module (various producers have similar models) and Voice Interaction Module by Yahboom Technology.

In tasks involving speech-to-text conversion, speech recognition not by entire words but by syllables is known as the most effective approach. This is followed by adjusting the results based on neighboring syllables and the existing combinations of known words. The theoretical and practical aspects of such transformations will be covered in the second part of this article.