Introduction

It is hard to imagine a modern software development process without CI/CD and a codebase covered by unit tests. Firmware for microcontrollers (MCUs) usually has a smaller codebase, and some teams don’t pay much attention to setting up these processes. While working on a new medical device, we discovered that the existing unit tests covered only the parts of the code related to program logic and calculations and did not cover calls to the hardware at all. In this article we discuss why such tests are necessary and describe a situation in which we needed to switch to another unit testing framework. And, of course, how we did it without much effort.

Testing hardware calls – why?

The answer is simple: the reason is the same as for the rest of the codebase. We want to be sure that our code works as expected. Some developers may be misled by the fact that they use a well-documented SDK API and decide that they can rely on it without checking the calls to it. But consider prototype development: at any moment the SDK may be updated to a newer version, or the MCU itself may even be replaced. In this case the hardware calls have to be checked again. Such migrations can also happen while working on a new version of a device, when the codebase has already grown large. The last case where we may need to check hardware calls is mass production, when we want to check every device for defects. For this purpose a special test firmware is created, and it can easily be based on the set of hardware unit tests.

One more problem: calculations cannot be reliably checked on a PC, because the PC hardware differs from the MCU, and some mathematical operations, such as floating-point arithmetic, may give different results.
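This example is our own illustration, not code from the project. It shows how the same floating-point expression can give different results depending on the target. Some MCU FPUs, as well as modern desktop CPUs, provide a fused multiply-add instruction. Depending on the target and compiler flags, `a * b + c` may then be evaluated with one rounding (fused) or with two (separate multiply and add). The sketch uses `std::fma` to emulate the fused variant. The function names and input values are chosen only for this illustration.

```cpp
#include <cassert>
#include <cmath>

// a * b + c with two roundings: the product is rounded to float first.
// Storing it in a volatile variable keeps the compiler from fusing the
// multiply and the add into a single FMA instruction.
float multiplyThenAdd(float a, float b, float c)
{
    volatile float product = a * b;
    return product + c;
}

// a * b + c with a single rounding, as a fused multiply-add instruction does
float fusedMultiplyAdd(float a, float b, float c)
{
    return std::fma(a, b, c);
}

// Inputs chosen so that the two variants differ (both are exact in float):
// kA * kA = 1 + 2^-11 + 2^-24 exactly, which rounds to 1 + 2^-11 in float
const float kA = 1.0f + 1.0f / 4096.0f;    // 1 + 2^-12
const float kC = -(1.0f + 1.0f / 2048.0f); // -(1 + 2^-11)
```

With these inputs the exact result is 2^-24 (about 6e-8). `fusedMultiplyAdd` returns it, while `multiplyThenAdd` returns 0. An ASSERT_NEAR check with a suitable tolerance accepts both results. An exact comparison, however, could pass on one target and fail on another, which is one reason to run calculation tests on the MCU itself.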

With these points in mind, we started fixing our project.

Choosing framework

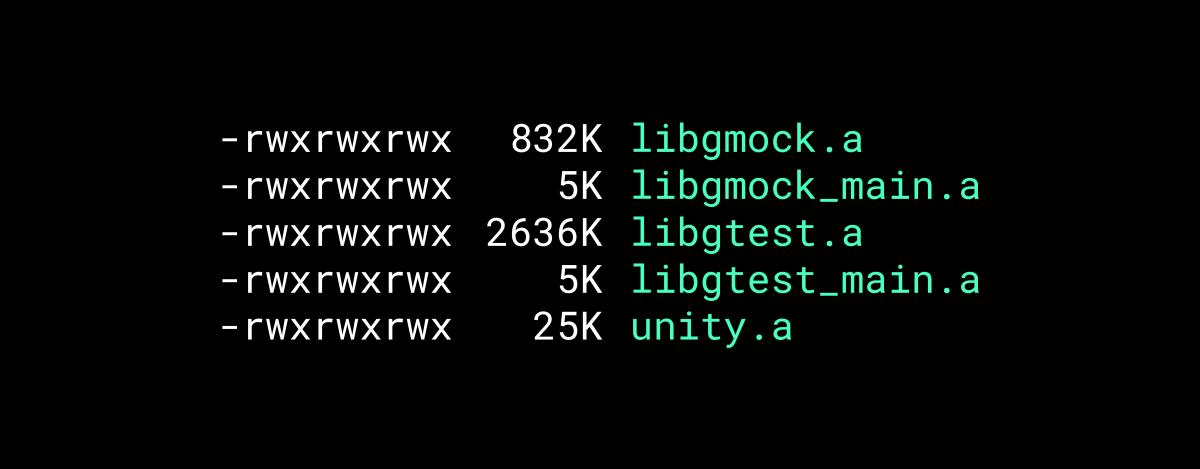

Initially, the project used GoogleTest, a C++ unit testing framework. It has many advantages, but we won’t discuss them here. In our case its disadvantages mattered more, specifically the size of the built library: it was larger than the entire memory of the device (256 KB).

Of course, we could have patched its codebase and kept only the parts we actually used, reducing the final library size. But that felt like forcing an inappropriate tool to fit rather than finding a good solution. Meanwhile, we found Unity, a simple and lightweight framework (and no, it has nothing to do with game development). It consists of two headers and one source file written in pure C, which can be a big advantage for teams who don’t use C++ in their firmware. We didn’t need complicated test scenarios, so we chose this framework.

At that point we used Unity for hardware calls and GoogleTest for everything else. In general it worked, and we considered stopping there: having two frameworks for the same purpose is overhead, but migrating the existing tests seemed like a lot of work. Soon, however, the problem came back. As mentioned above, we needed to check the accuracy of calculations on the MCU itself, which would have required duplicating those tests in both frameworks, and that was unacceptable for us. We dug into the GoogleTest source code and looked at how its test macros are implemented. It seemed that we could easily write a GoogleTest => Unity converter that would let us keep the existing test codebase unchanged.

Writing gtest2unity.h

The idea is rather simple: redefine the macros of the old framework with our own macros built on top of the new framework. That way we only need to change the included header, without touching a single line of the test cases.

Let’s start with the simplest macros, the assertions:

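```cpp
#include "unity.h"

// GoogleTest assertions mapped onto their Unity counterparts
#define ASSERT_EQ(a, b) TEST_ASSERT_EQUAL(a, b)
#define ASSERT_NE(a, b) TEST_ASSERT_NOT_EQUAL(a, b)
#define ASSERT_TRUE(a) TEST_ASSERT_TRUE(a)
// Note the different argument order: Unity takes the tolerance first
#define ASSERT_NEAR(expected, actual, delta) TEST_ASSERT_FLOAT_WITHIN(delta, expected, actual)
```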

We don’t need to look at what is behind these macros: the parameters are the same, and the names make it clear that the checks are equivalent. One caveat: Unity’s TEST_ASSERT_EQUAL compares its arguments as integers, so this mapping of ASSERT_EQ is only safe for integer values, and floating-point results should be checked with ASSERT_NEAR. Both frameworks offer a rather large set of checks, and some of them are probably unique to one framework, but ordinary unit tests rarely run into this problem. Let’s move on to the more complicated part: what if the existing tests use GoogleTest-specific API?

```cpp
class PumpDriverTests : public testing::Test
{
protected:
    PumpDriver pumpDriver;

    void SetUp() override
    {
        pumpDriver.init();
    }

    void TearDown() override
    {
        pumpDriver.deinit();
    }
};
```

Obviously, we need our own testing::Test class to inherit from. Let’s write a minimal version of it:

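```cpp
namespace testing
{

class Test
{
public:
    // Virtual destructor, as usual for a base class with virtual functions
    virtual ~Test() = default;

protected:
    // Overridden by fixtures; empty by default, as in GoogleTest
    virtual void SetUp() {}
    virtual void TearDown() {}
};

} // namespace testing
```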

And the last piece of code that depends on GoogleTest:

```cpp
TEST_F(PumpDriverTests, testInit)
{
    // some test code here
}
```

At this point we turned to the GoogleTest source code, because it is hard to guess what is hidden behind the TEST_F macro. If you are interested in the original code, see the GTEST_TEST_ macro in gtest-internal.h in the GoogleTest repository on GitHub. There is a lot of code behind TEST_F, and we don’t need most of it. We identified the main points to adapt:

- Every test case is a separate class derived from the fixture class (PumpDriverTests in our example);

- The test case body is actually the implementation of a private method of this class;

- There must be a function that creates an instance of this class and calls SetUp(), the test body and TearDown() in sequence.

Let’s define a couple of macros that generate unique names for the classes and functions:

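```cpp
// Name of the function that runs one test case (referenced by the runner)
#define EXTERN_TEST_F_NAME(test_group, test_name) \
    test_group##_##test_name##_caller

// Name of the class that holds one test case
#define TEST_F_CLASS_NAME(test_group, test_name) \
    test_group##_##test_name##_Test
```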
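The snippet below stringifies the output of these macros to show what names they produce. `STRINGIFY` and `STRINGIFY_IMPL` are hypothetical helpers introduced only for this illustration and are not part of gtest2unity.h.

```cpp
#include <cassert>
#include <string>

// The two naming macros from gtest2unity.h
#define EXTERN_TEST_F_NAME(test_group, test_name) \
    test_group##_##test_name##_caller
#define TEST_F_CLASS_NAME(test_group, test_name) \
    test_group##_##test_name##_Test

// Hypothetical helpers for this illustration only: the two-level form makes
// the preprocessor expand the argument before turning it into a string
#define STRINGIFY_IMPL(x) #x
#define STRINGIFY(x) STRINGIFY_IMPL(x)

const std::string callerName = STRINGIFY(EXTERN_TEST_F_NAME(PumpDriverTests, testInit));
const std::string className = STRINGIFY(TEST_F_CLASS_NAME(PumpDriverTests, testInit));
```

Here `callerName` is `"PumpDriverTests_testInit_caller"` and `className` is `"PumpDriverTests_testInit_Test"`. Because the names are built by plain concatenation with underscores, different pairs can collide. For example, group `A_B` with test `C` and group `A` with test `B_C` both yield `A_B_C_caller`. GoogleTest’s own documentation advises against underscores in test suite and test names for a similar reason.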

Now we can implement the points described above:

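```cpp
#define TEST_F(test_group, test_name)                                     \
    /* Each test case is a class derived from the fixture */              \
    class TEST_F_CLASS_NAME(test_group, test_name) : public test_group    \
    {                                                                     \
    private:                                                              \
        /* The test case body becomes this private method */              \
        void implement();                                                 \
        /* The caller needs access to SetUp(), TearDown(), implement() */ \
        friend void EXTERN_TEST_F_NAME(test_group, test_name)();          \
    };                                                                    \
                                                                          \
    /* Creates the test object and runs setup, body and teardown */       \
    void EXTERN_TEST_F_NAME(test_group, test_name)()                      \
    {                                                                     \
        TEST_F_CLASS_NAME(test_group, test_name) t;                       \
        t.SetUp();                                                        \
        t.implement();                                                    \
        t.TearDown();                                                     \
    }                                                                     \
                                                                          \
    /* The { ... } written after TEST_F(...) becomes the method body */   \
    void TEST_F_CLASS_NAME(test_group, test_name)::implement()
```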
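The whole mechanism can be checked without Unity or real hardware. The following self-contained sketch repeats the relevant parts of gtest2unity.h, leaving out the assertion macros. It also replaces the real PumpDriver with a hypothetical stand-in that only records which methods were called. This shows the order in which the generated caller runs the fixture and the test body.

```cpp
#include <cassert>
#include <string>
#include <vector>

// --- Minimal parts of gtest2unity.h (no Unity needed for this check) ---
namespace testing
{
class Test
{
public:
    virtual ~Test() = default;

protected:
    virtual void SetUp() {}
    virtual void TearDown() {}
};
} // namespace testing

#define EXTERN_TEST_F_NAME(test_group, test_name) test_group##_##test_name##_caller
#define TEST_F_CLASS_NAME(test_group, test_name) test_group##_##test_name##_Test

#define TEST_F(test_group, test_name)                                  \
    class TEST_F_CLASS_NAME(test_group, test_name) : public test_group \
    {                                                                  \
    private:                                                           \
        void implement();                                              \
        friend void EXTERN_TEST_F_NAME(test_group, test_name)();       \
    };                                                                 \
    void EXTERN_TEST_F_NAME(test_group, test_name)()                   \
    {                                                                  \
        TEST_F_CLASS_NAME(test_group, test_name) t;                    \
        t.SetUp();                                                     \
        t.implement();                                                 \
        t.TearDown();                                                  \
    }                                                                  \
    void TEST_F_CLASS_NAME(test_group, test_name)::implement()

// --- Hypothetical stand-in for the real driver: it only records calls ---
std::vector<std::string> callLog;

struct PumpDriver
{
    void init() { callLog.push_back("init"); }
    void deinit() { callLog.push_back("deinit"); }
};

// The fixture from the article, unchanged
class PumpDriverTests : public testing::Test
{
protected:
    PumpDriver pumpDriver;
    void SetUp() override { pumpDriver.init(); }
    void TearDown() override { pumpDriver.deinit(); }
};

// A GoogleTest-style test case, also unchanged
TEST_F(PumpDriverTests, testInit)
{
    callLog.push_back("body");
}
```

Calling `PumpDriverTests_testInit_caller()` produces the log `init`, `body`, `deinit`. One limitation is worth knowing. By default Unity leaves a failing test via `longjmp`, so when an assertion fails inside `implement()`, `t.TearDown()` is not called. In C++, skipping the destructor of a fixture with non-trivial members this way is undefined behavior.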

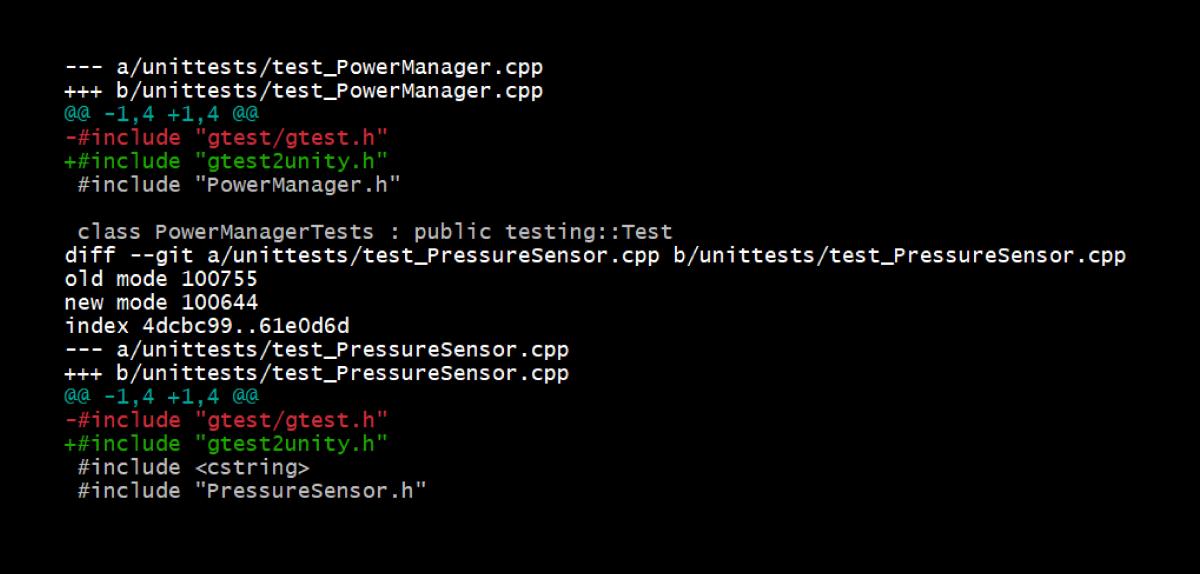

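And that’s it! We have a working version of gtest2unity.h. Now the migration is easy: each test file includes gtest2unity.h instead of the GoogleTest header, and the test cases themselves stay unchanged.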

And, of course, don’t forget to add the Unity files to the build system (CMakeLists.txt in our case). Now there are no compilation errors, and the work is done… or is it? Successful compilation is good, but who will run our tests? GoogleTest registers and runs tests automatically, but with Unity every test has to be called explicitly from a runner. Developers don’t like manual work, so we decided to write a simple test runner generator.

Generating test_runner.cpp

Launching Unity tests is an easy task:

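```cpp
#include "unity.h"

// A test function defined in another source file
void test_function(void);

int main()
{
    UNITY_BEGIN();
    RUN_TEST(test_function);
    return UNITY_END();
}
```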

There was no point in thinking long about the generator, so we simply picked Python and got started. The parts of the runner before and after the RUN_TEST calls, including the header includes, are hardcoded as string constants. The generator then parses the source files of the existing tests and processes them trivially. It searches for the TEST_F macro, extracts its parameters and puts them into the EXTERN_TEST_F_NAME macro twice: first before main() in an extern declaration (telling the compiler that the definition is in another file), and then inside RUN_TEST to launch the test case.

Once the information about all test cases has been collected, the generator writes the final test_runner.cpp (don’t forget to add it to the build system too). Now both the build and the test run will fail when a code change breaks something.
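Our generator was written in Python. To keep the examples in one language, here is a rough C++ sketch of the same logic. The names `findTestCases`, `generateRunner` and `TestCase`, the regular expression, the included headers and the exact layout of the emitted file are assumptions, not the original script. The sketch is deliberately trivial. It does not skip comments or disabled code, so it should only be given test source files. Given gtest2unity.h itself, it would also match the `TEST_F` definition.

```cpp
#include <cassert>
#include <regex>
#include <sstream>
#include <string>
#include <vector>

// One TEST_F(group, name) occurrence found in a test source file
struct TestCase
{
    std::string group;
    std::string name;
};

// Collects all TEST_F(group, name) occurrences from the given source texts
std::vector<TestCase> findTestCases(const std::vector<std::string>& sources)
{
    static const std::regex testF(R"(\bTEST_F\s*\(\s*(\w+)\s*,\s*(\w+)\s*\))");
    std::vector<TestCase> cases;
    for (const std::string& source : sources) {
        for (std::sregex_iterator it(source.begin(), source.end(), testF), end; it != end; ++it) {
            cases.push_back({(*it)[1].str(), (*it)[2].str()});
        }
    }
    return cases;
}

// Emits the text of test_runner.cpp for the collected test cases
std::string generateRunner(const std::vector<TestCase>& cases)
{
    std::ostringstream out;
    // Hardcoded header part (assumed include names)
    out << "#include \"unity.h\"\n"
        << "#include \"gtest2unity.h\"\n\n";
    // Declarations only: the definitions live in the test source files
    for (const TestCase& tc : cases) {
        out << "extern void EXTERN_TEST_F_NAME(" << tc.group << ", " << tc.name << ")();\n";
    }
    // Empty Unity hooks: RUN_TEST calls setUp() and tearDown() around every test
    out << "\nvoid setUp(void) {}\nvoid tearDown(void) {}\n\n";
    out << "int main()\n{\n    UNITY_BEGIN();\n";
    for (const TestCase& tc : cases) {
        out << "    RUN_TEST(EXTERN_TEST_F_NAME(" << tc.group << ", " << tc.name << "));\n";
    }
    out << "    return UNITY_END();\n}\n";
    return out.str();
}
```

The returned string can then be written to test_runner.cpp, for example with `std::ofstream`, as a build step. The empty `setUp()` and `tearDown()` are emitted because unity.h expects the test executable to provide them. In Unity configurations that supply weak defaults, these definitions simply override the defaults.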

Conclusion

Finally, we would like to stress that we don’t recommend such tricks except in exceptional cases. In our situation it would have been enough to account for the need to test SDK API calls and to choose the right unit testing framework from the start. Unfortunately, we often work in less than ideal conditions, which is why it is useful to keep such an approach in mind.

Admittedly, the code in this article is far from ideal (and the parts we didn’t show even more so), but it was enough for our case, and we could continue working on the project and covering the entire codebase with unit tests.